API rate limiting is a mechanism web servers or online services use to control the number of requests a client can make within a specific period. The purpose of rate limiting is to prevent abuse, protect server resources, ensure fair usage, maintain service reliability, and maintain quality of service among all API users.

Various challenges can arise for both API providers and users if proper rate limiting is not put in place. Here are some of the key challenges:

Abuse and Overload:

DDoS Attacks: Without rate limiting, APIs are more susceptible to Distributed Denial of Service (DDoS) attacks where malicious actors can flood the server with a large number of requests, overwhelming its resources.

Resource Exhaustion: Unrestricted access can lead to the exhaustion of server resources, such as CPU, memory, and bandwidth, affecting the performance of the entire system.

Unfair Resource Consumption:

Resource Hogging: Certain users or applications may consume an excessive amount of resources, leading to degraded service quality for other users.

Data Scraping: Malicious users might exploit unrestricted access to scrape large volumes of data, causing unnecessary load on the server and potentially violating terms of service.

Service Reliability:

Server Downtime: Overloaded servers may experience downtime or become unresponsive, affecting the availability of the service for all users.

Performance Degradation: A high volume of concurrent requests can result in slower response times for legitimate users.

Cost Implications:

Infrastructure Costs: Uncontrolled API usage can lead to increased infrastructure costs for the API provider, as they may need to scale up resources to accommodate the unexpected demand.

Bandwidth Costs: Higher-than-anticipated bandwidth usage can result in increased costs for the API provider.

Security Concerns:

Brute Force Attacks: Without rate limiting, attackers may attempt brute force attacks on authentication mechanisms, trying to gain unauthorized access by making an excessive number of login attempts.

Data Exposure: Unrestricted access can lead to unauthorized access to sensitive data, compromising the confidentiality and integrity of information.

Quality of Service:

Poor User Experience: Without rate limiting, the overall quality of service for legitimate users may suffer due to increased latency, timeouts, and service disruptions.

Inconsistent Performance: The absence of rate limits can lead to inconsistent performance, making it challenging for users to rely on the API for their applications.

Common Rate-limiting Techniques

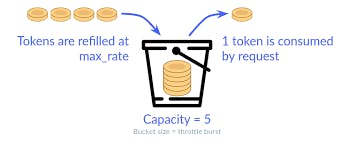

Token Bucket Algorithm:

How it works: The token bucket is an imaginary or conceptual bucket that holds a limited number of tokens. Clients are assigned tokens that are added to the bucket at a fixed rate, and each request consumes a token from the bucket to be processed. If a client has no tokens, they are rate-limited until new tokens are generated.

Advantages: The Token Bucket algorithm is flexible and can handle varying patterns of requests effectively. It provides a smooth and controlled rate-limiting mechanism, allowing bursts of requests within certain limits.

Use Cases: Token Bucket is commonly used in networking for traffic shaping, ensuring a steady flow of data. It can also be applied to scenarios where a controlled and consistent rate of processing is required.

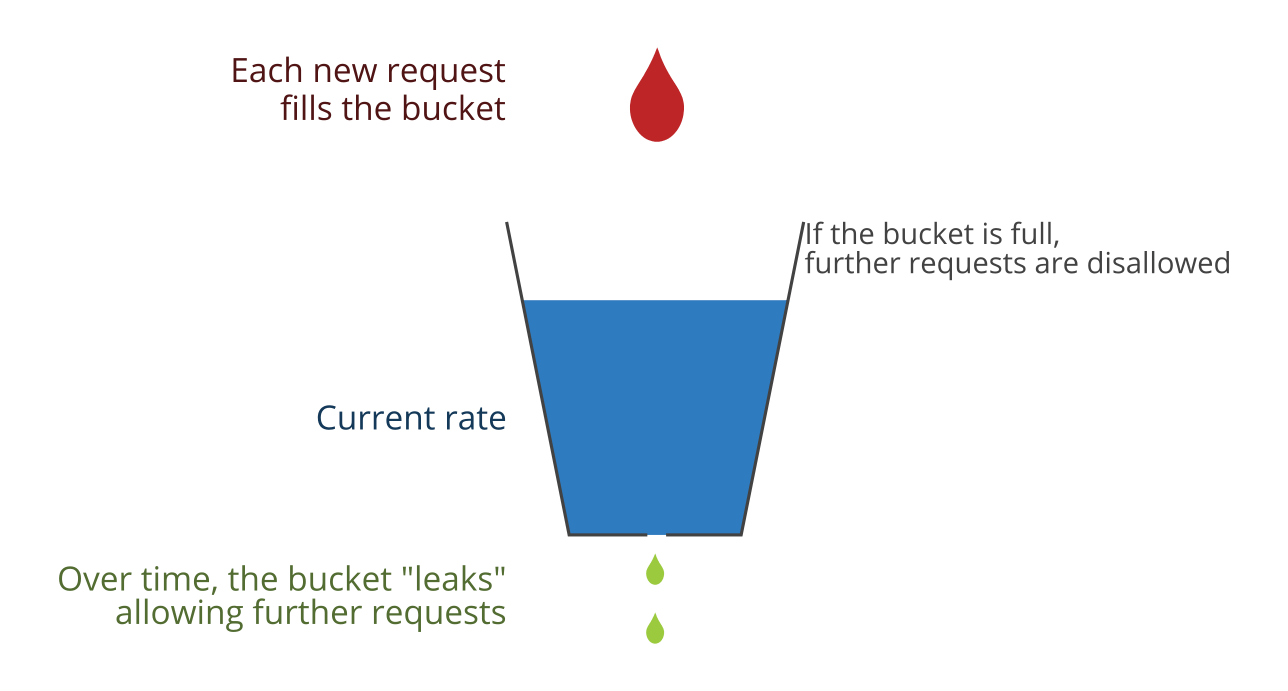

Leaky Bucket Algorithm:

How it works: The Leaky Bucket is conceptualized as a bucket that has a leak or hole at the bottom. Requests are added to the bucket at a fixed rate, and if the bucket is full, excess requests are delayed or dropped. This ensures a constant outflow of requests, preventing bursts.

Advantages: The Leaky Bucket algorithm is simple to implement compared to some other rate-limiting algorithms. It smooths out traffic and provides a constant rate of processing.

Use Cases: Like Token Bucket, Leaky Bucket is commonly used in networking for traffic shaping to regulate the flow of data and is applied in scenarios where a steady and controlled rate of processing is required.

Fixed Window Counter:

How it works: Time is divided into fixed intervals, such as seconds, minutes, or hours, Each interval is a separate time window during which the rate of requests is measured. It counts the number of requests within fixed time windows. If the count exceeds the limit, subsequent requests are rejected until the next window.

Advantages: The Fixed Window Counter is simple and easy to implement, but may be susceptible to burst traffic.

Use Cases: The Fixed Window Counter is suitable for scenarios where the rate of requests needs to be controlled on a per-time-window basis.

Sliding Window Counter:

How it works: Similar to the fixed window counter, but instead of resetting the counter at fixed intervals, the Sliding Window Counter maintains a dynamic time window that continuously moves forward. It maintains a count of requests within a moving time interval, allowing for more flexibility.

Advantages: The Sliding Window Counter better accommodates varying request patterns compared to fixed window counters and provides continuous monitoring of the request rate without abrupt resets.

Use Cases: The Sliding Window Counter is suitable for scenarios where a finer control and continuous rate-limiting approach is required, especially in the presence of bursty traffic.

Types of API Abuse Scenarios

API abuse refers to the unauthorized or malicious use of an API to exploit vulnerabilities, disrupt services, or gain unauthorized access to data. There are different types of API abuse scenarios, some of which are;

Denial-of-Service (DoS) Attacks: Overwhelming the API with a high volume of requests, causing it to become slow, unresponsive, or completely unavailable.

Scraping and Data Harvesting: Automated extraction of data from the API, often for unauthorized use or competitive purposes leading to loss of intellectual property, increased server load, and potential misuse of scraped data.

Brute-force Attacks: Repeated and rapid attempts to gain access to the API by systematically trying different combinations of credentials.

By setting appropriate rate limits and identifying/blocking malicious behavior, organizations can significantly enhance the security and performance of their APIs, safeguarding against a range of potential threats and ensuring a consistent and reliable user experience.

Conclusion

In conclusion, rate limiting is an essential mechanism that enables web servers and online services to control the number of requests a client can make within a specific period. It plays a critical role in preventing abuse, protecting server resources, ensuring fair usage, maintaining service reliability, and maintaining quality of service among all API users. Adopting common rate-limiting techniques can help organizations mitigate these challenges effectively and provide a smoother and more controlled rate-limiting mechanism.